How Safe Are Nuclear Power Plants?

A new history reveals that federal regulators consistently assured Americans that the risks of a massive accident were “vanishingly small”—even when they knew they had insufficient evidence to prove it.

By Daniel Ford · August 13, 2022 · The New Yorker

Keeping good records is a cardinal rule of bureaucracy, and, at government agencies, routinely hiding the sensitive ones, and utterly suppressing the most embarrassing ones, is the prevailing general imperative. In the United States, outsiders with resources and persistence, such as activists and journalists, can try to use the legal crowbar of the Freedom of Information Act to pry facts loose. But there is an easier way to look at government secrets and even peruse them at leisure: just be appointed an official government historian. There are hundreds of them working for the various federal agencies in the U.S., salary and benefits decent. Their job is to sift, with scholarly thoroughness, through raw, archival evidence, in order to determine how well or badly an agency has carried out its mission. Because these histories are usually published, in installments, long after the events they describe, agencies somehow relax. They permit quasi-independent, in-house scholars to poke through their business, potentially releasing information that would have brought the agency to its knees if it had been revealed in a more timely fashion.

Thomas Wellock, formerly a professor at Central Washington University, became the historian of the U.S. Nuclear Regulatory Commission (N.R.C.) more than a decade ago. He brought chops to the job—training in engineering, experience testing nuclear reactors, and a Ph.D. in history from Berkeley—and, in March of 2021, published the sixth in a series of authorized volumes about how the agency, and its predecessor, the U.S. Atomic Energy Commission (A.E.C.), has regulated civilian nuclear power. “Safe Enough? A History of Nuclear Power and Accident Risk” is a refreshingly candid account of how the government, from the nineteen-forties onward, approached the bottom-line question posed in the book’s title. Technically astute insiders at the A.E.C. took it for granted that “catastrophic accidents” were possible; the key question was: What were the chances? The long and the short of it, Wellock’s book suggests, is that, while many officials believed the chances were very low, nobody really knew for sure how low they were or could prove it scientifically. Even as plants were being built, the numbers used by officials to describe the likelihood of an accident were based on “expert guesswork or calculations that often produced absurd results,” he writes. The “guesswork” nature of such analysis was never candidly acknowledged to either the public or the agency’s licensing boards, which had the legal responsibility of determining that individual plants all around the country were safe enough to be approved for operation.

The U.S. nuclear-construction program collapsed decades ago, in part because of chronic cost overruns, but there are still ninety-two aging nuclear reactors operating in the U.S., and many utility companies want to extend their original forty-year licenses by another twenty years. Around the world, more than four hundred reactors are in operation, most of them using U.S. designs, or similar ones, from the sixties and seventies, which have documented flaws that are not easily correctable. The nuclear reactors at Fukushima Daiichi, Japan, for example, where meltdowns occurred in 2011, were designed by General Electric; there are thirty-one plants of the same basic G.E. vintage currently operating in the U.S. Wellock discloses internal records describing specific and potentially urgent safety issues, still unresolved, that pertain directly to many of the nuclear plants operating downwind of major population centers. Some of the foreign operators who rely on U.S. reactor designs may read his book and wonder whether there’s a refund policy.

In recent years, as mitigating climate change has become a high priority, some energy-policy experts have argued that we should return to building nuclear plants, or at least have the federal government sponsor research on new types of reactors with better safety and performance features. In principle, nuclear energy remains an appealing technology, assuming that the problem of long-term radioactive-waste disposal can be solved. And yet my own studies on reactor safety—which include papers co-authored with the late M.I.T. physicist and Nobel laureate Henry Kendall, and several books based on extensive reporting for this magazine—have concluded that nuclear power’s potential contribution to clean energy has been compromised by safety shortcuts taken by the industry, and by lax government regulation of day-to-day safety practices at the plants. (I appear in Wellock’s book, in part because I served as the executive director of the Union of Concerned Scientists—an organization that became “nuclear power’s most effective critic,” Wellock writes—from 1972 to 1979.) Studying Wellock’s history, which shows what the government got wrong about nuclear power, might be helpful in figuring out what the Biden Administration needs to get right if it is to attempt to revive the prospects for nuclear energy. His book also prompts us to ask whether such a revival is desirable, given that technologies exist for harnessing the sun and wind which don’t raise daunting safety issues in the first place.

Atoms for Peace, the postwar federal nuclear-power program announced by President Dwight Eisenhower, in 1953, aimed to turn swords into plowshares. Nuclear power was presented as the energy source of the future, to be delivered at a price “too cheap to meter.” At first, it was hard for the program to get off the ground, in large part because coal, the dominant source for U.S. power production, was already cheap and abundant. But a combination of government pressure and loss-leader pricing by nuclear-plant manufacturers led to the creation of a nuclear bandwagon in the nineteen-sixties. (In the seventies, an executive with the utility company Florida Power & Light told me that his company had adopted nuclear power so that its leaders wouldn’t be embarrassed on the golf course by other C.E.O.s who had done so.) In the early seventies, the A.E.C. predicted that there would be a thousand nuclear reactors operating in the country by 2000—an estimate that would turn out to be off by a thousand percent, give or take.

During the Second World War, the building of nuclear reactors was exclusively the province of the military. The government, Wellock notes, thought that it could resolve, or at least skirt, the technology’s biggest safety issues by building reactors in the desert of Washington State, “in the middle of sagebrush and rattlesnakes.” But utility companies, keen to avoid constructing expensive long-distance power lines, later argued for locating nuclear power plants closer to their customers in urban areas. In the early sixties, New York’s Consolidated Edison proposed building a large nuclear plant in Ravenswood, Queens—now part of Long Island City—about two miles from Times Square. There were protests, including from the former A.E.C. chairman David Lilienthal, and the project was nixed. But a nuclear-plant complex remained under development by Con Ed at Indian Point, near Croton-on-Hudson, some thirty-seven miles away. The A.E.C.’s top advisers questioned whether such reactors could be safely operated so close to metropolitan areas. Wellock cites internal records showing that, in a draft letter written in the late sixties, the A.E.C.’s principal reactor-safeguards committee warned the agency’s top leadership that, unless new emergency cooling technology could be developed, future nuclear reactors were “suitable only for rural or remote sites.”

The remote-siting approach not only threatened government ambitions for nuclear power by adding to the costs but was a telltale sign of its inherent dangers; nevertheless, the A.E.C. wanted to let plans for building hundreds of large nuclear plants near urban areas move ahead. Wellock finds that the agency’s commissioners intervened to stop the issuance of the letter. (Samuel Jensch, who chaired the committee that licensed the Indian Point plant, later wrote that licenses for many facilities might have been denied if the government had been forthcoming about its internal concerns; the furnishing of this eye-opening information to the licensing boards, he suggested, could well have meant the end of commercial nuclear power.) From the sixties onward, the A.E.C. used talking points that presented the risks of catastrophic nuclear accidents as “exceedingly low” and “vanishingly small.” Meltdowns—accidents in which the uncontrolled overheating of a reactor’s nuclear fuel creates the potential for the dispersal of radioactive fallout over a large area—were characterized as “incredible events” that, for all policymaking purposes, could be treated as impossible. A meltdown was supposed to be so unlikely that citizens didn’t have to lose sleep worrying; plant designers, similarly, were allowed to focus exclusively on preventing lesser mishaps, such as temporary cooling-system malfunctions, and relieved of the responsibility for building safety systems to try to mitigate accidents in which cooling was lost and could not be restored quickly enough.

For those who are deeply concerned about the safety of nuclear power, Wellock’s book paints a disturbing picture. The technical challenge of assuring nuclear safety, and of numerically calculating the risk of a bad accident, was at the basis of many uncertainties. “A nuclear power plant’s approximately twenty thousand safety components have a Rube Goldberg quality,” Wellock writes. “Like dominoes, numerous pumps, valves, and switches must operate in the required sequence to simply pump cooling water or shut down the plant. There were innumerable unlikely combinations of failures that could cause an accident.” And yet, even as plants were beginning to be built in larger numbers, regulators were realizing that they didn’t have the tools they needed to make reliable safety estimates. If you have substantial data, risks can be calculated easily. Insurance companies, which pioneered the statistical techniques of risk analysis, look at how often accidents of specific types occur under various circumstances. But the nuclear-power industry that emerged in the fifties and sixties was building big plants before it had developed a track record of operating smaller ones. It had no statistics about the safety of the large units it was constructing—novel, complex, custom-built machines. Stephen Hanauer, a senior federal regulatory official at that time, who held a Ph.D. in physics, summarized this “uncomfortable reality” in numerous internal memos. He duly sent them to other A.E.C. officials, often lawyers and political appointees—not scientists—who then filed them away.

Often, nuclear plants were designed by firms that had never done the job before and operated by utility companies that had little experience in doing much beyond burning coal and stringing transmission wires. Shrewdly, the industry sought to protect itself from the risks it might be imposing on others: it refused to consider building large numbers of plants until 1957, when Congress passed the Price-Anderson Act, which effectively granted it blanket protection from paying the full cost of potential liabilities should accidents occur. To make matters even dodgier, the emerging nuclear industry operated under a loose regulatory framework. The A.E.C. issued little more than “General Design Criteria” for the industry to follow. The edition of the criteria published in 1965 included a pious edict that “heat removal systems” be provided. But the industry received little guidance on the question of how this difficult feat might be engineered; the agency trusted the industry to figure it out, and largely rubber-stamped the designs it proposed. All the while, the plants themselves were getting jaw-droppingly big. The first nuclear reactor, built in 1942 beneath a football stadium at the University of Chicago, had barely been able to power a light bulb—but now the government wanted reactor complexes that could power all of Chicago. As one A.E.C. chairman put it, nuclear power was being transformed, in just twenty years, from the Wright brothers’ Kitty Hawk flyer into a Boeing 747. Depending on your point of view, this rambunctious approach was either a laudable alternative to incremental technological advancement or imprudent, expensive, and reckless.

Although government experts couldn’t nail down the probability of an accident, they could use straightforward arithmetic to predict the damage that might result. The results were presented in a 1957 study by the A.E.C.’s Brookhaven National Laboratory. The study, which drew on research on the impact of ionizing radiation, conducted after the bombings of Hiroshima and Nagasaki, indicated that a worst-case scenario for a major accident at what was then considered a large nuclear plant could cause thirty-four hundred deaths and seven billion dollars in property damage—about seventy-four billion dollars in today’s money. Eight years later, in 1965, Brookhaven updated its analysis of a worst-case scenario. Nuclear plants had grown in scale, and the implications were devastating: a meltdown could cause forty-five thousand deaths, with radioactive contamination creating a potential “area of disaster the size of the State of Pennsylvania.” When the 1965 update landed at A.E.C. headquarters, Wellock writes, “The commission opted to suppress the results. For the next eight years, the draft update sat like a tumor in remission in A.E.C. filing cabinets, waiting to metastasize.” It was made available only in 1973, after the Chicago attorney Myron Cherry demanded its release.

In the gallows humor of the nuclear-engineering community, the kind of mishap that could lead to catastrophe was referred to as the China Syndrome: the idea was that a major malfunction could cause a white-hot blob of molten uranium to form, and that this blob would begin to sink into the earth, heading in the general direction of China. According to Wellock, such a scenario became an obsession for the government, starting in the late sixties—posing, as it did, a public-relations problem that could kill the nuclear-power program, especially given the rise of the environmental movement. James Schlesinger, an economist appointed by President Richard Nixon as the A.E.C. chairman in 1971, worried that the agency—which was then being challenged about the scientific basis of its nuclear-safety claims—lacked satisfactory answers, and he supported a major inquiry into accident probability. But the study, which was finished in 1975, didn’t put the safety question to rest, and, for its critics, its shortcomings underscored how much the government didn’t know. The N.R.C. was later forced to repudiate its widely publicized findings. According to notes taken by an agency staffer named Thomas Murley, and obtained by Wellock, there was a debate about letting “the chips fall where they may”—that is, releasing whatever results the experts reported. Schlesinger and others urged caution; at one early point, he advised staffers working on the report to “keep all references to death, injuries or property damage in the vaguest possible terms.”

Wellock’s book proffers no evidence that anyone inside the A.E.C. was involved in a criminal conspiracy to hide the risks posed by nuclear plants; he is the agency historian, after all, and not a prosecutor. Instead, there seemed to be an abundance of hubris and cult-like true-believing in the idea of a nuclear future in which the risk of a catastrophic accident was accepted, as a matter of doctrine, to be very low. “We all believed our own bullshit back then,” Murley, who went on to become a director of nuclear reactor regulation at the N.R.C., told me. The hope, for some, was that progress was being made, or could somehow be counted on, through the agency’s ongoing safety-research program, to get technology, such as reliable emergency-cooling systems, developed and proved. Officials also consoled themselves with the belief that even if one safety system didn’t work, the danger of catastrophic glitches could be lowered through a piling on of many safety systems. Putting a nuclear reactor inside some kind of strongbox, for example, which might be able to contain radioactive materials in the event of a mishap, was one of the earliest and most obvious ideas. Officials then realized that, as reactors were scaled up, a steel-and-concrete strongbox was not enough—additional backup systems were required. Regulators feared that an event such as an earthquake might disable both the primary and the backup systems, allowing large quantities of radioactive debris to be carried away on the wind. Even more mundane weaknesses with the setup were discovered: containment buildings might not function as intended if, for example, they were left open to permit maintenance and other routine tasks; if an accident occurred suddenly, the openings might not be closed quickly enough.

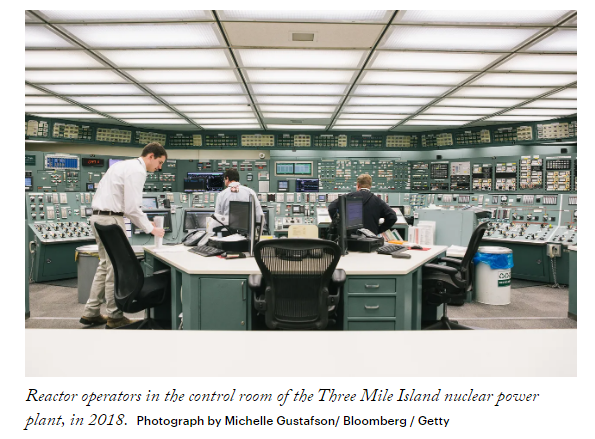

Containment buildings did prove helpful during the Three Mile Island disaster, in 1979, and at Fukushima, in 2011, as the physicist Frank von Hippel, of Princeton, has pointed out. The containment facilities at the Japanese plant, while not leak-tight, reduced the release of radioactive materials and provided enough time for more than a hundred thousand people to escape immediate harm from the fallout. On the other hand, more than a decade later, some thirty-five thousand people are unable to return to their homes, many of which have been contaminated with cesium-137, a long-lived radioactive substance that emits intense gamma radiation and raises the risk for cancer. Perhaps most alarmingly, the accident at Fukushima nearly caused a fire in a spent-fuel storage pool that was outside the strongbox. According to recent analyses by von Hippel and others, if that had happened, the release of radioactive material could have multiplied a hundredfold, potentially requiring the relocation of as much as a quarter of the Japanese population, depending on which way the winds were blowing. Such an outcome would have threatened metropolitan Tokyo, a hundred and fifty miles away.

A careful reader of Wellock’s book urgently wants him to nail down an answer to the underlying question: What is the actual risk, today, of a potentially catastrophic nuclear-plant accident? Wellock dodges the issue in deference to its technical difficulty, and perhaps as a subtle indication that he is still working for the government. (During my on-the-record interview with Wellock, an N.R.C. public-relations officer sat in, and said that Wellock could answer questions about historical matters but not current agency policy.) Wellock’s book notes that some analysts have put forward rough statistics based on the history of the worldwide nuclear industry: the world’s reactors have now been in operation for more than fourteen thousand “reactor-years,” and to date there have been “five core-damage accidents”—Three Mile Island, Chernobyl, and the three reactors at Fukushima. These numbers, in a back-of-the-envelope sense, suggest that the world should expect one full or partial meltdown every six to seven years. If that estimate is plausible—Wellock presents no challenge to it—then the worldwide nuclear program is slightly overdue for its next big surprise. Another study, published in the Bulletin of the Atomic Scientists in 2016, reached the similar conclusion that the “overall probability” of a meltdown in the next decade was almost seventy per cent.
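For readers who want to check the back-of-the-envelope figure, here is a minimal sketch of the arithmetic. The reactor-year and accident counts come from the passage above; the fleet size of four hundred is an assumption taken from the earlier "more than four hundred reactors" remark, and the function names are illustrative. The second function assumes accidents arrive at a constant average rate (a simple Poisson model), which is this sketch's own simplification and not necessarily the method of the Bulletin study.

```python
import math

# Figures quoted in the article.
REACTOR_YEARS = 14_000        # cumulative worldwide operating experience
CORE_DAMAGE_ACCIDENTS = 5     # Three Mile Island, Chernobyl, three at Fukushima

# Assumption: the article says only "more than four hundred" reactors operate.
OPERATING_REACTORS = 400


def years_between_accidents(reactor_years, accidents, fleet_size):
    """Average calendar years between core-damage accidents for a given fleet."""
    reactor_years_per_accident = reactor_years / accidents
    # A fleet of N reactors accumulates N reactor-years every calendar year.
    return reactor_years_per_accident / fleet_size


def chance_of_at_least_one(reactor_years, accidents, fleet_size, horizon_years):
    """Chance of one or more accidents within the horizon.

    Assumes a constant historical accident rate (Poisson model), which is
    an illustrative simplification rather than a method from the article.
    """
    expected_accidents = accidents / reactor_years * fleet_size * horizon_years
    return 1.0 - math.exp(-expected_accidents)


if __name__ == "__main__":
    interval = years_between_accidents(
        REACTOR_YEARS, CORE_DAMAGE_ACCIDENTS, OPERATING_REACTORS
    )
    decade_risk = chance_of_at_least_one(
        REACTOR_YEARS, CORE_DAMAGE_ACCIDENTS, OPERATING_REACTORS, 10
    )
    print(f"Roughly one core-damage accident every {interval:.1f} years")
    print(f"Chance of at least one in the next decade: {decade_risk:.0%}")
```

With four hundred reactors, the interval works out to seven years; a somewhat larger fleet pulls it toward six, which is where the "six to seven years" range comes from. The decade probability is sensitive to the constant-rate assumption and should be read as a rough order-of-magnitude check only.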

In 1982, I wrote in this magazine about the risk of another major accident following the one at Three Mile Island. At that time, I did the same sort of math. The numbers suggested that another major nuclear accident would come due in about three years. The Chernobyl disaster occurred roughly on schedule, four years later, in 1986. By such figuring, with no crystal ball required, Fukushima was a bit late in arriving. But since it involved three meltdowns, the quick calculation that I had made still proved a good-enough indicator of how much risk the world is running. The next meltdown, arithmetically speaking, is just around the corner; the only issue I cannot resolve is where it will occur. At Diablo Canyon, in California? At one of the French nuclear reactors in its pretty countryside? I claim no clairvoyance, but in 1986, I was on NBC News, being interviewed about the Chernobyl accident, and Tom Brokaw asked which U.S. nuclear plant I thought might become our Chernobyl. I guessed the Davis-Besse plant, which was near Toledo, Ohio, because of various reports I had seen about sloppy safety practices there. It did not have a meltdown—though, the next day, the utility company that owned it had one, from the publicity my remarks generated. But in 2002, it was revealed that undetected corrosion had created a grapefruit-size hole in the top of its reactor vessel and had come within a quarter of an inch of causing a major accident. The plant had to be shut down for years, and the repairs cost millions; its operating company paid the largest fine in nuclear-regulatory history, along with civil penalties and restitutions. Wellock described the problem uncovered at Davis-Besse as “one of the most potentially dangerous events in US commercial nuclear power history.”

After the Three Mile Island incident, a Presidential commission, chaired by John Kemeny, the mathematician and president of Dartmouth College, issued a sobering report. It expressed amazement that risky maintenance was being performed on the reactor’s cooling system while the plant was operating rather than when it was safely shut down, and remarked on how high-school graduates, who’d received little more than a crash course in the basics of reactor oversight, were allowed to be in charge of the nuclear facility. (The N.R.C. did not require an engineer to be part of the crew operating a nuclear power plant.) Among other recommendations, the commission urged a return to the early practice of siting reactors away from cities. Murley, when he became the director of nuclear-reactor regulation at the N.R.C., took this suggestion seriously, and he told me that in the early nineties he instructed his staff to develop new rules for where to build nuclear plants; in keeping with the informal coöperation that existed between his agency and international regulatory authorities, they consulted with their European counterparts to get their views. “The Europeans went batshit crazy,” Murley said. High population densities in Europe meant that the proposed remote-siting rules would be difficult to follow; the rules could also call the safety of existing nuclear plants into question. The new regulations were not enacted. Murley said that he got a call from Ivan Selin, the chairman of the N.R.C. “He advised me very quietly to drop it,” Murley told me. He added, “I don’t think I told Tom Wellock that story.”

Wellock’s book also doesn’t explore the further question of whether nuclear power is truly something we want more of, to mitigate global warming. That’s understandable—the question goes far beyond Wellock’s mandate as the agency’s historian—but it’s on many people’s minds. One argument, which has been floated among supporters of a revived nuclear program, is that it was a grave mistake for the critics of nuclear power in the seventies and eighties to overlook the impending climate crisis. Some of these nuclear-revival supporters have suggested that the safety regulations put in place after the Three Mile Island disaster might have been overkill, and contended that greater costs imposed by these rules on the industry led to the decline in nuclear-plant construction.

As someone who wrote about the issue back then, I can perhaps add some perspective. I did not neglect the CO2 problem in my own investigation of nuclear risks, which began in 1970, at the Harvard Economic Research Project; in fact, it was because of the air pollution caused by fossil-fuel emissions and the threat of global warming that we were studying nuclear power, asking whether it might be ideal for generating electricity. In a series of papers, Henry Kendall and I attempted to analyze the risks of nuclear power plants compared with the known risks created by continuing to burn fossil fuels. It is naïve, of course, to expect that public policy would be based on the careful weighing of costs and benefits. When decisions get made by the government, they are often made by executive fiat, driven partly by the lobbying of corporations, with only tangential input from the public or independent experts. The only real brake on the system is economic. Ultimately, the nuclear program was effectively halted not by academic nitpicking or protests by Sierra Clubbers but by economic realities: construction and operating costs for nuclear plants simply became untenable, even for utility companies, which were allowed to pass them on to consumers.

By the seventies, Con Ed and others had realized that nuclear power was not, in fact, “too cheap to meter.” Building plants was an engineering nightmare. Companies had to pay for cost overruns and delays caused by the fact that construction was often started without a finished design; work had to be done, torn down, and redone until the débutant builders cobbled the plants together. In fact, the death certificate for the U.S. nuclear industry can be dated precisely to the summer of 1974. U.S. interest rates were approaching the double digits, and utility companies, en masse, stopped ordering nuclear units; cancelled their outstanding contracts for new ones; and even scrapped nuclear-construction projects that were already well under way. This entailed losses of hundreds of billions of dollars for the utilities and led to a cascade of unprecedented defaults in the nuclear industry. No new nuclear plants would be ordered in the U.S. for thirty-four years.

In recent decades, the few forays into nuclear-plant construction—such as the ill-starred Vogtle plant, in Georgia—have turned into multibillion-dollar debacles. And, according to the data reported by the International Atomic Energy Agency, which conducts worldwide surveys of plans for future power generation, nuclear power is doomed—possibly because open-checkbook projects fit nobody’s sensible business model. A recent I.A.E.A. report estimates that nuclear power will be all but irrelevant as a world energy source in 2050, with its share of world power-generation likely falling into the single digits. Meanwhile, there has been steady progress in the development of affordable wind and solar power.

Decades’ worth of evidence testifies to the difficulty, expense, and danger of turning swords into plowshares. It’s still accruing. European utility companies, faced with reduced Russian natural-gas supplies as a result of the Ukraine war, have looked to electricity from France’s nuclear system—the Continent’s largest—as a potential godsend. And yet the promise of such help has been upended by the recent discovery of stress-corrosion cracking in pipes located in the critical cooling systems of numerous French nuclear units. A dozen reactors have been shut down, and no one knows how long it will take to fix them. It may take years. Meanwhile, the heat wave and drought in Europe this summer have forced other units to go offline, since river water flow no longer suffices as an adequate coolant. Altogether, French nuclear capacity has been effectively cut in half. The fact that nuclear power has fallen on its face when it is needed most is a hint that it is not the key to world energy security. ♦